Fortinet Acquires Next DLP Strengthens its Top-Tier Unified SASE Solution

Read the release

Developments in Artificial Intelligence (AI) and Machine Learning (ML) are booming. While these technologies have been in use for several years, the launch of ChatGPT, Google Bard, and other Large Language Models (LLM) introduced many to their powers and efficiencies, making the tools more readily available to the general public.

AI promises improved operational efficiency and the ability to scale services. Better yet, organizations no longer need to build these technologies themselves. Instead, they can leverage AI-as-a-Service (AIaaS) offerings to accelerate time to value.

|

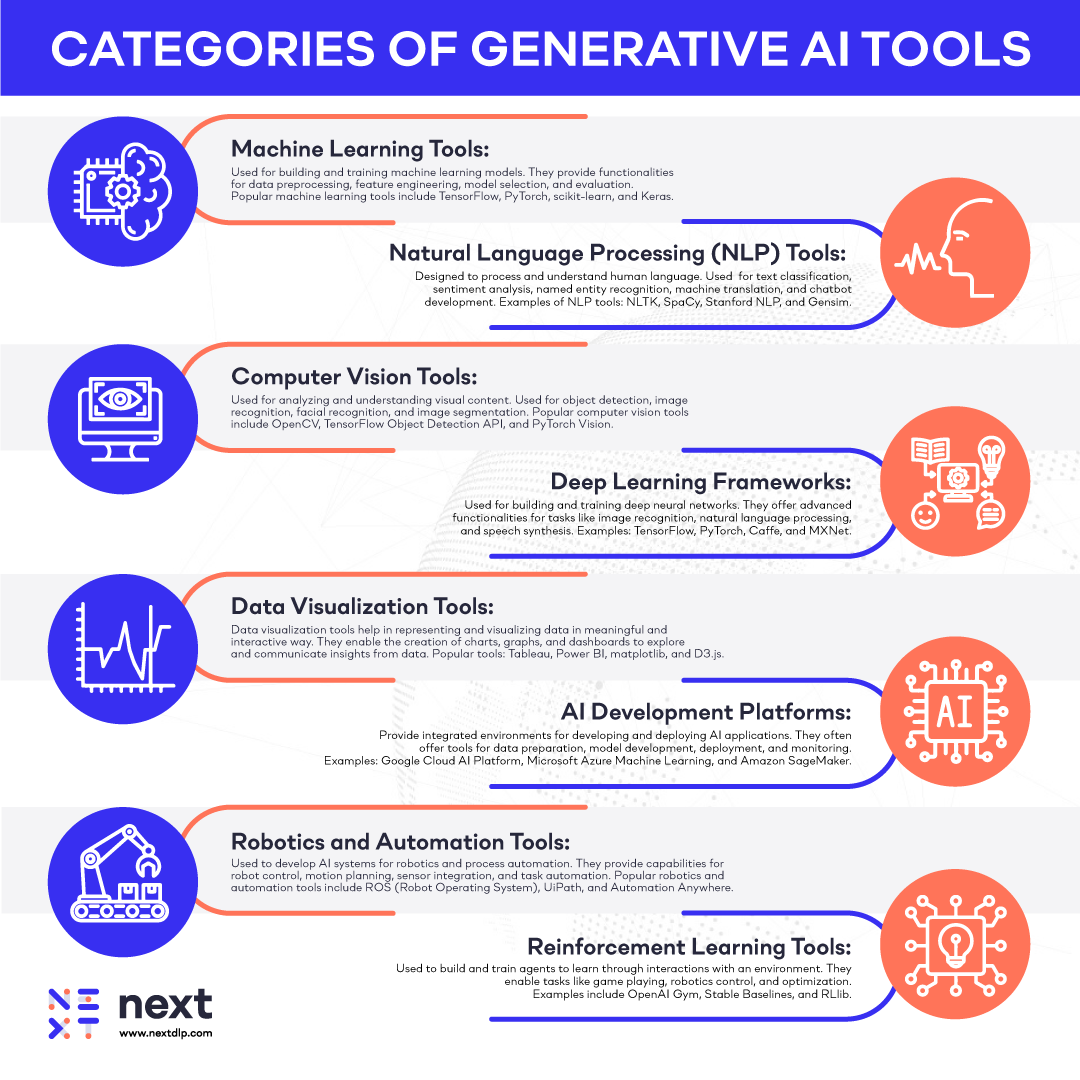

These can include:

|

While these solutions have many benefits, organizations should also do their due diligence to understand the third-party risk they present.

Information entered into any AI system may become part of the system’s data set. While OpenAI states they do not use content submitted through their API to improve their service, they can use content from non-API sources for that purpose. Most services will use feedback from users to improve their responses.

Depending on the specific system and how it is designed and implemented, this function, intended to improve the AI model, could put your IP at risk. For example, the system could use your images, text, or source code as examples or as the basis for answers provided to other users. Accidental inclusion in training data could expose trade secrets or inadvertently provide other users with similar images or product functionality. Similarly, a patent application or other IP uploaded to a language translation application may become part of that system’s training set.

This risk is why, after an employee uploaded proprietary source code to a system while trying to debug the code, Samsung banned employees from using public Chatbots. A single employee simply trying to get work done potentially exposed their IP and vulnerabilities in the semiconductors that an attacker could exploit. Another Samsung employee reportedly submitted confidential notes on an internal meeting to ChatGPT and asked it to create a presentation from the notes.

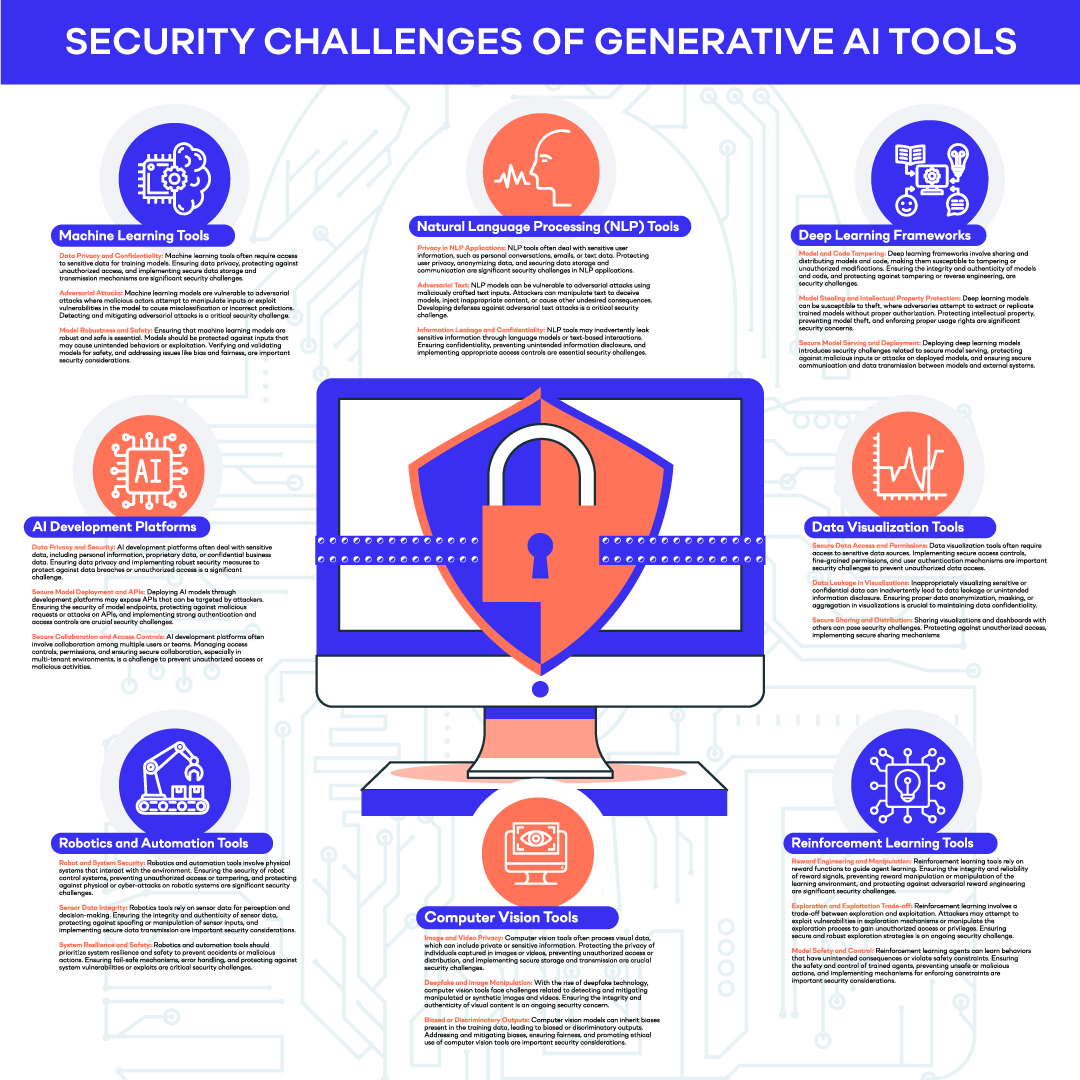

Emerging technologies bring both opportunities and challenges. As organizations adopt generative AI tools, it is essential to assess the potential risks involved. Conducting a thorough vendor assessment can help identify any security vulnerabilities and ensure appropriate security controls are in place. Risk assessments should be performed regularly to avoid emerging threats and protect sensitive data. Organizations can mitigate the risks of using generative AI tools by taking proactive measures and implementing robust security practices.

To reduce these risks, it is essential to carefully evaluate any AI system before entering your IP into it. Evaluation may involve assessing the system's security measures, encryption standards, data handling policies, and ownership agreements. From a data protection standpoint, acknowledge that AI systems, including Chatbots, search engines, text/image converters, are potential data exfiltration channels.

An AI system’s only source of information is its training set. In a public system with input from multiple entities, the output can potentially be based on proprietary data from other users. For example, a training set may include copyrighted material from books, patent applications, web pages, and scientific research. Suppose this AI system is used to create a new product or invention. In that case, the output may be subject to patent protection or IP rights by the user who originally submitted that information. Also, consider the opposite situation. The US Copyright Office recently ruled that the output from AI is not eligible for copyright protection unless it includes “sufficient human authorship.”

While OpenAI and Google Bard explicitly state that users own both input and output and do not use user-inputted information to augment their training base, other services may differ. Data privacy is a critical consideration when using generative AI tools. Organizations must review the terms and conditions of the service to understand how data is stored and handled. Ensuring that any data submitted to the service is encrypted and protected from unauthorized access is vital. Questions to ask vendors about data privacy include whether they store data, how long it is stored, and whether it is anonymized and encrypted. Organizations can protect sensitive information and comply with privacy regulations by prioritizing data privacy. AI users should be aware of the potential IP rights associated with the output generated by AI systems and take appropriate measures to protect their IP rights and respect the IP rights of others. A system's legal terms and conditions may assign rights to its output to a user, but it is unclear whether these systems have the legal right to make those assignments. Include your legal team when evaluating any AI system.

Many tools have restrictions for their use. For example, most chatbots have been programmed not to provide examples of malicious code, such as ransomware and viruses. Most will not engage in hate speech or illegal activities. There may also be consequences for violating the guidelines, ranging from warnings to account suspension or termination, depending on the severity of the violation.

However, inputs to AI and ML systems can be viewed as similar to inputs to other applications. In a software application, developers implement input validation to prevent attacks like SQL injection and cross-site scripting. AI systems are just coming to terms with system hacks. For example, indirect prompt injection is when an LLM is asked to analyze some text on the web and instead starts to take instructions from that text. Researchers have shown that this could be used to trick users of website chatbots into providing sensitive information. Other “jailbreaking” techniques can cause systems to violate controls and produce hateful content.

Include AI systems in your appropriate use policies to protect your organization against reputational damage. Have a reporting mechanism for unusual output and an incident response plan in case of an attack on the system.

Data provided to AI and ML systems may be stored by their providers. Suppose said data includes personal information from users, such as names, email addresses, and phone numbers. In that case, this data is subject to privacy regulations such as the California Consumer Privacy Act (CCPA), the Virginia Consumer Data Protection Act, Europe’s General Data Protection Regulation (GDPR), and similar regulations.

Some AI service providers, such as those producing social media content and demand generation emails, may integrate with other services such as Facebook accounts, content management systems, and sales automation systems. Depending on the service provider, this could expose sensitive data or cause it to be uploaded to the provider’s servers.

Review the terms and conditions of any service you use. If you use an AI system or service that stores data, you should ensure the data you submit is encrypted to protect it from unauthorized access. This review can help prevent data breaches and protect sensitive personal, financial, or other confidential information.

You should train users to practice cyber hygiene like any other third-party service. Maintaining good cyber hygiene is essential when using generative AI tools. Users should be trained on security awareness and best practices, such as using strong passwords and not sharing account credentials. Multi-factor authentication should be implemented for systems handling sensitive information. Organizations should also ensure that they have valid licenses for the AI tools they use and comply with the service's license agreements. By practicing good cyber hygiene, organizations can reduce the risk of unauthorized access and protect their sensitive data.

Train your users on security awareness and cyber hygiene. Institute strong password policies across all applications and use multi-factor authentication for systems handling sensitive information.

Finally, organizations should consider leveraging Data Loss Prevention to monitor and control the information submitted to such services. It is essential to have the ability to recognize and classify sensitive data as it is accessed and used. Organizations can proactively identify and mitigate the risk of data loss or unauthorized disclosure by implementing DLP measures when using generative AI tools. Some of these services can serve as exfiltration channels. Your DLP should include recognizing and classifying sensitive data as it is accessed and used. For example, suppose a user attempts to copy sensitive data into a query or adds customer information to a service. In that case, your DLP platform should recognize that attempt, track the steps leading up to the event, and intervene before the data is leaked.

Watch an on-demand demo to learn more about how Reveal helps with insider risk management and data loss prevention.

Artificial Intelligence (AI) is being implemented in many types of cybersecurity solutions. Following are some of the ways AI is currently being used to protect IT environments.

Implementing AI in cybersecurity must be done with the following potential risks in mind.

Yes, AI can pose various threats to cybersecurity. Threat actors can use AI to attack an IT environment in the following ways.

AI tools can be used to perpetrate business email compromise attacks that can deceive users into divulging sensitive data and cause reputational damage to the targeted organization.

Blog

Blog

Blog

Blog

Resources

Resources

Resources

Resources