Fortinet Acquires Next DLP Strengthens its Top-Tier Unified SASE Solution

Read the release

Large language models like ChatGPT have quickly become part of daily life. As exciting–and as useful–as these rapid technological innovations are, they present challenges to data security.

Companies are wary of large language models like ChatGPT because the AI model is continuously trained from the data users input into it. As a result, that information stands to become publicly available. In fact, Open AI warns, "any data that ChatGPT is exposed to, including user inputs and interactions, can potentially be stored and used to further train and improve the model," and further iterates the user's responsibility in data security: "it is important to handle user data with care and take appropriate measures to protect it from loss or unauthorized access."

Organizations are looking to protect themselves from unintended data leakage, where sensitive information is shared with language model services, and from exposure to intellectual property, where a language model may reproduce copyrighted content from its training data.

Stories of companies–large, small, and even government departments–scrambling to put policies in place around these kinds of tools have become increasingly common. Across an anonymized survey of our customer base, we can see that 50% of the group has employees using ChatGPT at work. Specifically, one in ten company-issued machines protected by the Reveal platform in this data set have been used to access ChatGPT. We’ve also seen that employees who work for larger companies are more likely to access ChatGPT: 97% of the larger accounts have employees who use ChatGPT in their daily work.

|

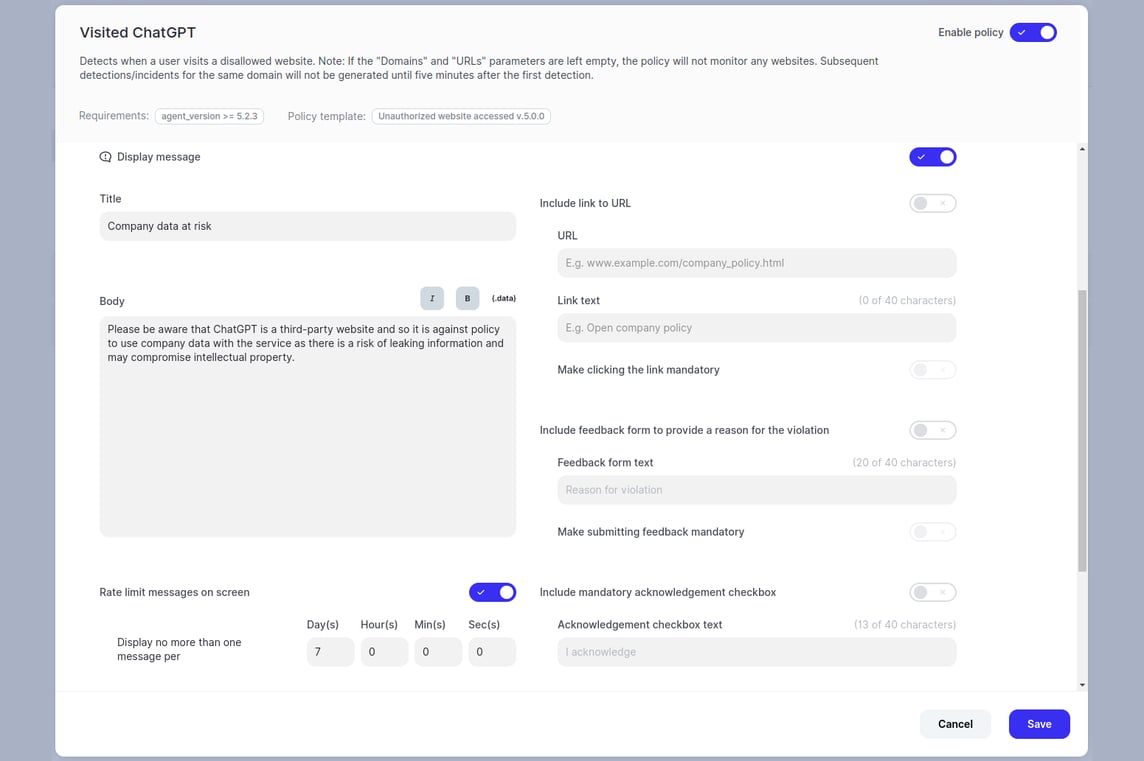

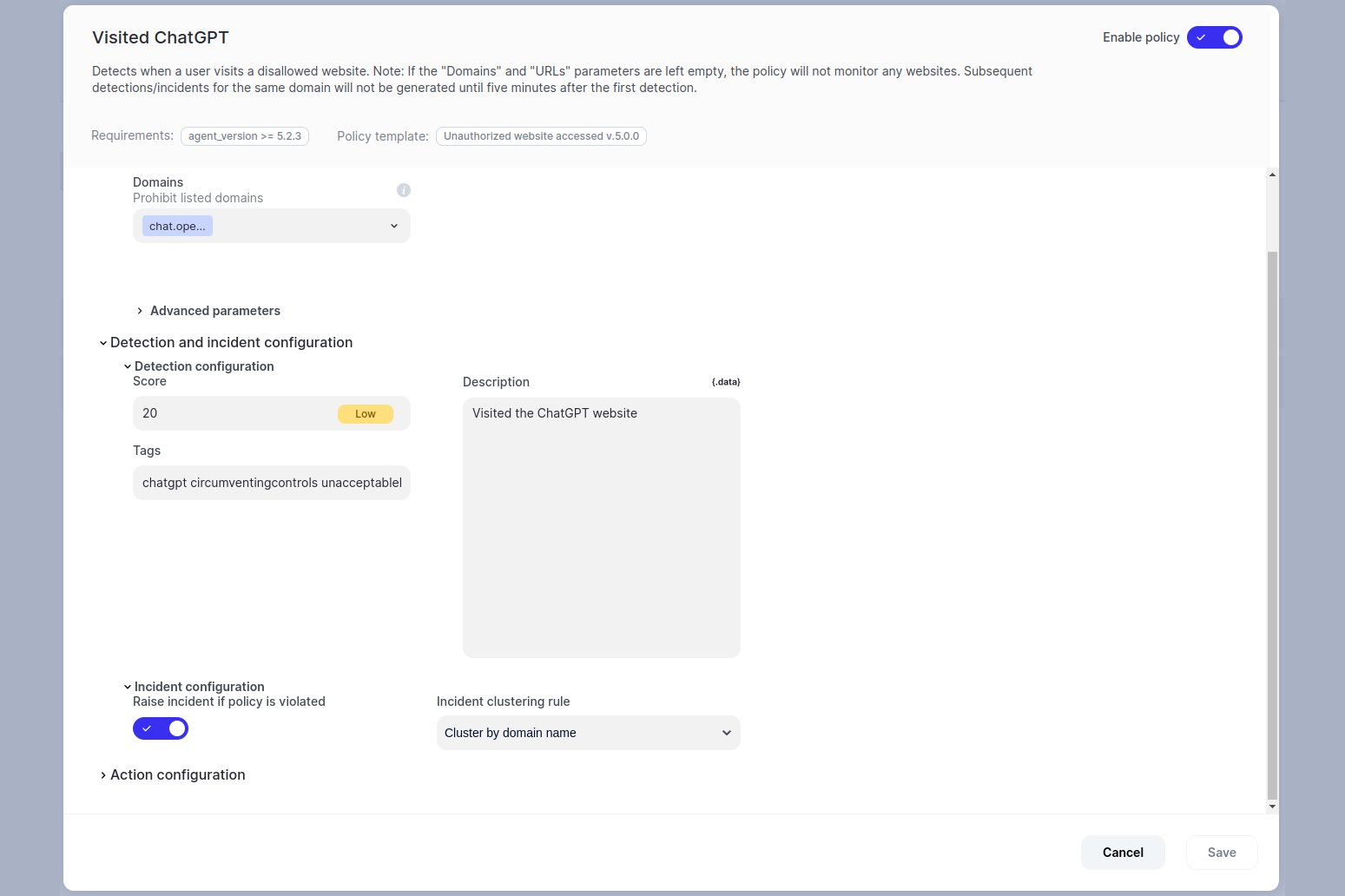

The Reveal platform is based around a highly configurable policy infrastructure. While we provide a set of pre-configured policies out of the box to address common data protection and regulatory requirements, our policy templates are also highly configurable. They can be adapted to address ever-changing threats to an organization's threat landscape. In other words, policies can be customized to solve completely novel challenges.

In the case of ChatGPT, Reveal’s policy builder allows system administrators to weigh different behaviors related to the AI tool. Policies at their lightest can be set to encourage good behavior and, at their most strict, block specific activities or any interaction at all. For example, a policy could be configured where visiting chat.openai.com triggers a warning message reminding the user that any data shared with the chatbot effectively becomes public by entering the system’s training model. Another example is allowing the tool to be used but limiting the type of information that can be entered into the learning data set, like top-secret project names or proprietary code sets.

It is often believed that protecting sensitive data and adopting new technologies and practices are antithetical, a byproduct of legacy strategies in data loss prevention. At Next, we reject that assumption. When the data protection software is flexible and easily configurable, new policies targeting data as it is in use and in motion enable businesses to stay nimble while protecting their most valuable assets.

1 Research methodology: Next used anonymized data in the Reveal platform to inform ChatGPT usage and the development of the policy templates.

Blog

Blog

Blog

Blog

Resources

Resources

Resources

Resources