Fortinet Acquires Next DLP, Strengthens Its Top-Tier Unified SASE Solution


Organizations must be able to identify sensitive data before they can protect it, but does that visibility have to be complete before protection can begin? Legacy data loss prevention (DLP) solutions typically answer yes, and they take the same approach to this need: identify it, classify it, find it, protect it. We’ve grown impatient with legacy methodologies and believe there’s a better way to prevent data loss. But before getting to our philosophy, let’s look at legacy DLP practices and where they fall short.

First, legacy solutions must identify data categories: the different types of data the organization handles. These may include personally identifiable information (PII), protected health information (PHI), financial data, intellectual property, partner data, trade secrets, or any other data categories relevant to the organization.

Next, they need to determine the criteria by which to classify the data. Depending on the business, these could include data sensitivity, confidentiality, regulatory requirements, and the impact on the organization if the data is compromised, among many other factors.

Finally, legacy DLP solutions require that organizations scan all systems and data stores to identify, classify, and tag all data, in structured and unstructured form — before data protection can begin.

Classifying data this way is time-consuming and resource-intensive. Pre-classification can take months of effort, during which sensitive data remains at risk. Nor does the problem end when the initial project does: newly created data, or new data types, may not be covered by the existing information security program until the next round of classification.

Data discovery and classification exercises can also disrupt business processes by adding overhead on the endpoint. Endpoints experience performance degradation while discovery runs, so the more frequently discovery is performed, the more often end users feel the impact. This can frustrate users during time-sensitive work such as customer service interactions or financial transactions.

Another issue with traditional data classification approaches is handling unstructured data. Many legacy DLP solutions were designed when structured databases held most sensitive data, including PII, PHI, customer lists, and financial data. Today, 80 to 90 percent of the data organizations generate is unstructured. Sensitive data in unstructured formats, including emails, chat messages, screenshots, presentations, images, videos, and audio files, is ignored by systems that focus only on structured data. This means a legacy approach may be seeing and protecting only 10 to 20 percent of your organization’s data.

Pre-classifying data delays time to value and protection while increasing expenses and overhead. Data at rest is not at risk until a user – legitimate or malicious – accesses it. Therefore, a better approach is to classify data automatically as it is created, accessed, and used.
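The difference between scanning everything up front and classifying on access can be sketched in a few lines. This is a generic, hypothetical illustration rather than how Reveal is implemented: `OnAccessClassifier`, `on_event`, and `toy_detector` are names invented here, and the detector is a stand-in for whatever classification logic a real product uses. The point is that classification runs only when data is created or touched, and a content hash keeps unchanged data from being classified twice.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, Dict, FrozenSet


@dataclass
class OnAccessClassifier:
    """Hypothetical sketch: label a document when it is created or accessed,
    rather than scanning every data store before protection starts."""

    # Pluggable detector mapping text to labels (an assumption for illustration).
    classify: Callable[[str], FrozenSet[str]]
    # Results keyed by a SHA-256 hash of the content, so unchanged data
    # is not classified twice.
    cache: Dict[str, FrozenSet[str]] = field(default_factory=dict)
    scans: int = 0  # number of times the detector actually ran

    def on_event(self, content: str) -> FrozenSet[str]:
        """Call from a create, open, or modify hook; classifies lazily."""
        key = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if key not in self.cache:
            self.scans += 1
            self.cache[key] = self.classify(content)
        return self.cache[key]


def toy_detector(text: str) -> FrozenSet[str]:
    # Hypothetical rule: anything marked "confidential" gets one label.
    return frozenset({"confidential"}) if "confidential" in text.lower() else frozenset()


if __name__ == "__main__":
    clf = OnAccessClassifier(classify=toy_detector)
    clf.on_event("Q3 forecast - CONFIDENTIAL")
    clf.on_event("Q3 forecast - CONFIDENTIAL")  # same content: served from cache
    print(clf.scans)  # 1
```

Data that nobody touches is never scanned, which is the trade-off the paragraph above argues for: protection effort follows actual use instead of waiting on a full inventory.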

Next Reveal eliminates the need for pre-classification by moving machine learning to each endpoint, simplifying DLP and insider risk protection and accelerating time to value. It automatically identifies and classifies sensitive data as it is created or accessed.

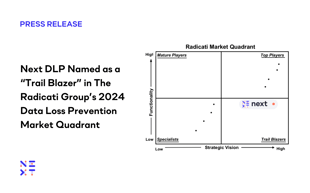

Reveal’s real-time data classification considers both content and context to identify and classify data as it is created and used. Content-level inspection identifies patterns for PII, PHI, PCI, and other fixed data types. Contextual inspection identifies sensitive data in both structured and unstructured data and can be based on signals such as the location of the data, which helps identify design documents, source code, and financial reports. As noted by the Radicati Group in their Market Quadrant for Data Loss Prevention, Reveal’s real-time data classification works “without the need for predefined policies.”
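To make the content/context distinction concrete, here is a minimal, hypothetical sketch of the two kinds of inspection. It is not Reveal’s detection logic, which the text above describes as machine learning on the endpoint; the regular expressions, label names, folder name, and file extensions are all illustrative assumptions. Content inspection looks for fixed patterns (a US Social Security number format, and card-like digit runs confirmed with the standard Luhn checksum), while context inspection looks only at where the file lives.

```python
import re
from pathlib import PurePath
from typing import Set

# Illustrative content patterns (assumptions; real detectors are far richer).
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")  # 13 to 19 digits

# Illustrative context rules (hypothetical extensions and folder names).
SOURCE_EXTS = {".py", ".java", ".c", ".cpp", ".go", ".rs", ".ts"}
DESIGN_EXTS = {".dwg", ".step"}


def luhn_ok(digits: str) -> bool:
    """Standard Luhn checksum used to validate payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(path: str, text: str) -> Set[str]:
    labels: Set[str] = set()

    # Content-level inspection: fixed data types found in the text itself.
    if SSN_RE.search(text):
        labels.add("PII")
    for match in CARD_RE.finditer(text):
        if luhn_ok(re.sub(r"\D", "", match.group())):
            labels.add("PCI")
            break

    # Contextual inspection: what the file's location says about it.
    p = PurePath(path)
    folders = {part.lower() for part in p.parts}
    suffix = p.suffix.lower()
    if suffix in SOURCE_EXTS:
        labels.add("source_code")
    if suffix in DESIGN_EXTS or "designs" in folders:
        labels.add("design_document")
    if "finance" in folders:
        labels.add("financial_report")
    return labels
```

For example, `classify("/shares/Finance/q3.txt", "card 4111 1111 1111 1111")` yields both a content label (`PCI`, since that test number passes the Luhn check) and a context label (`financial_report`, from the folder name). Context rules like these catch documents that contain no fixed pattern at all, which is why the two kinds of inspection complement each other.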

Organizations invest in DLP and insider risk management solutions because of threats to sensitive data that exist today. Waiting months to build a classification schema and then identify and classify data, all while that information remains vulnerable to attack, is a luxury few organizations can afford.

Reveal’s real-time data classification solves this problem. Reveal deploys in minutes and begins identifying sensitive data as soon as users access it. Its policy-free approach enables rapid time to value and eliminates workflow disruptions caused by outdated granular policies. Machine learning on each endpoint allows Reveal to baseline each user’s behavior in days instead of months, so it can understand what is acceptable and report on risks to data without preset rules.
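The idea of a per-user baseline can be illustrated with a deliberately simple statistical stand-in. The sketch below is hypothetical and is not Reveal’s machine learning: it tracks one daily activity count per user (for example, files copied to removable media, an assumed metric), stays silent until it has a few days of history, and then flags values more than `threshold` standard deviations from that user’s own mean. The `min_days`, `threshold`, and the 1.0 floor on the standard deviation are arbitrary assumptions.

```python
from statistics import mean, pstdev
from typing import Dict, List


class UserBaseline:
    """Hypothetical per-user baseline for one activity metric.
    A z-score test stands in for a real machine learning model."""

    def __init__(self, min_days: int = 5, threshold: float = 3.0) -> None:
        self.min_days = min_days    # days of history required before scoring
        self.threshold = threshold  # standard deviations that count as unusual
        self.history: Dict[str, List[float]] = {}

    def observe(self, user: str, value: float) -> None:
        """Record one day's value for this user."""
        self.history.setdefault(user, []).append(value)

    def is_anomalous(self, user: str, value: float) -> bool:
        past = self.history.get(user, [])
        if len(past) < self.min_days:
            return False  # still learning what is normal for this user
        mu = mean(past)
        # Floor the spread (an assumption) so a very steady user is not
        # flagged for tiny changes and we never divide by zero.
        sigma = max(pstdev(past), 1.0)
        return abs(value - mu) / sigma > self.threshold
```

In use, each day’s value would be scored with `is_anomalous` and then recorded with `observe`. Because every user is compared only with their own history, no organization-wide rule has to be written in advance, which is the property the paragraph above emphasizes.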

Book a demo or contact Next to learn how your company can deploy a single solution to protect your sensitive data from insider threats and external attacks.

Traditional data classification methods are outdated because they rely on a time-consuming, resource-intensive process of pre-classifying data before protection can begin. This approach delays the implementation of data protection, leaving sensitive data at risk during the classification process.

Plus, traditional methods struggle to handle the vast amounts of unstructured data modern organizations generate.

Traditional data classification methods struggle with unstructured data because they’re designed for systems that store sensitive data in structured databases. This approach was common two decades ago, but doesn’t hold up in a modern context.

Today, unstructured data formats, including emails, chat messages, images, videos, and audio files, comprise most organizations' data. Legacy solutions often ignore these formats, protecting only a small fraction of an organization’s data.

